There is a trap that many organizations new to risk quantification and FAIR fall prey to, one that I’ve seen lead to excessive time spent on analysis work, missed deadlines, SME burnout and overall frustration with the quantitative risk analysis process.

The trap… not right-sizing precision for your organization.

Although we at RiskLens always advocate for accuracy over precision, and train organizations to value it, it seems that most of us, at least at first, have a difficult time taming the Uncertainty Siren and her calls for greater and greater levels of precision.

At first hearing, the call is familiar and makes sense. “Of course, I want to use the most precise data I can find to exactly represent this risk to our organization.” Almost without thinking, the newly minted quantitative risk management team begins to scour the organization for exact data points, tracking down SMEs, holding sessions with them and asking whether they can pull data from various security tools.

Some will easily find a plethora of data and eager SMEs willing to help them achieve their desired level of precision. For many others, the precision thought bubble that formed at the onset of the engagement pops when they come face to face with their organization and its limitations.

I want to make it clear here that precision is not the problem. Organizations should aspire to greater degrees of precision and outline a road map for achieving said precision.

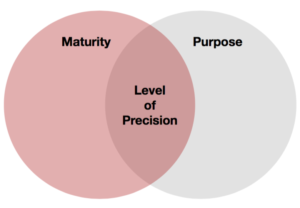

The problem, as I see it, is that prior to implementing a quantitative risk management program, few organizations consider how precise they can get in light of the following two items:

What we are hoping to achieve (i.e. the purpose or goal of our risk analysis work)

- What decisions are we hoping to inform?

- Will we be conducting more strategic (e.g. top ten risk analysis, risk appetite) or tactical (e.g. ROI on X security initiative) risk analysis work?

- What does our audience expect to see?

Compared against

Level of maturity

- Do we have visibility into our assets, processes and threats?

- Do we have tools in place that can readily access data (logs, IDS/IPS, DLP, etc.)?

- Do we have solid existing relationships with both IT/IS and Business SMEs, or is this something we’ll need to cultivate?

The cross section between these two elements should shine a light on the current level of precision that is achievable for your organization.
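To make that cross section a little more concrete, here is a minimal sketch in Python. It is not part of FAIR or any RiskLens tooling; the `Maturity` fields simply mirror the three maturity questions above, and the tier names, the scoring rule and the `right_size` function are hypothetical choices made for illustration. The desired tier is something you would set yourself from the goals of your analysis work.

```python
from dataclasses import dataclass

# Hypothetical precision tiers, ordered from least to most precise.
TIERS = ["wide", "moderate", "narrow"]


@dataclass
class Maturity:
    asset_visibility: bool    # visibility into assets, processes and threats
    data_tooling: bool        # tools that can readily access data (logs, IDS/IPS, DLP)
    sme_relationships: bool   # solid relationships with IT/IS and business SMEs


def achievable_tier(maturity: Maturity) -> str:
    """Map the number of 'yes' answers to a tier (an assumed rule, not a standard)."""
    score = sum([maturity.asset_visibility,
                 maturity.data_tooling,
                 maturity.sme_relationships])
    if score == 3:
        return "narrow"
    if score == 2:
        return "moderate"
    return "wide"


def right_size(desired: str, maturity: Maturity) -> dict:
    """Compare the precision your goals call for with what maturity allows today."""
    if desired not in TIERS:
        raise ValueError(f"desired must be one of {TIERS}")
    achievable = achievable_tier(maturity)
    # The working target is the less precise of the two tiers.
    target = min(desired, achievable, key=TIERS.index)
    return {
        "achievable": achievable,
        "target": target,
        "gap": TIERS.index(desired) > TIERS.index(achievable),
    }


result = right_size("narrow", Maturity(True, False, True))
print(result)
```

A `gap` of `True` is not a failure. It marks the distance your road map needs to cover while you keep producing analyses at the achievable tier.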

The benefits of taking this approach:

- You define success and right-size the quantitative risk management program for your organization.

- Regardless of the level of precision currently feasible at your organization, you are always leveraging FAIR, a sound and rigorous framework for breaking down risk. THIS IS FOUNDATIONAL.

- Understanding and acknowledging ahead of time that you’ll be leveraging data points with lower degrees of precision should foster quicker risk analysis work as you’ll be spending less time hunting for additional data.
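A small illustration of why lower precision does not have to mean a worse analysis, sketched in Python. It assumes estimates are expressed as ranges, treats an estimate as accurate when the range contains the value that actually occurs, and treats precision as the narrowness of that range. The `RangeEstimate` type and all of the numbers are hypothetical and not drawn from any real analysis.

```python
from typing import NamedTuple


class RangeEstimate(NamedTuple):
    low: float
    high: float


def is_accurate(estimate: RangeEstimate, actual: float) -> bool:
    """An estimate is accurate if the actual value falls inside its range."""
    return estimate.low <= actual <= estimate.high


def width(estimate: RangeEstimate) -> float:
    """A narrower range means a more precise estimate."""
    return estimate.high - estimate.low


# Hypothetical estimates of loss events per year.
quick_estimate = RangeEstimate(1, 10)     # gathered quickly, less precise
tight_estimate = RangeEstimate(2.0, 2.5)  # took far longer to source, more precise
actual_events = 4                         # what happened (illustrative)

for name, est in [("quick", quick_estimate), ("tight", tight_estimate)]:
    print(name, "width:", width(est), "accurate:", is_accurate(est, actual_events))
```

In this made-up case the wide range is the accurate one, while the narrow range is precise but wrong. The point is the trade-off, not the specific numbers.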

Again, I’m not advocating for complacency when it comes to gathering data points and developing estimates. All organizations should aspire to get better and to move along the maturity spectrum.

What you should do is understand where you are in your quantitative risk management journey and define success accordingly. This should reduce frustration and increase motivation across the program.
